The quantum computing landscape is evolving fast. As a physicist and innovation-focused writer, I want to explore how the major architectures — superconducting qubits, topological qubits, and photonic qubits — compare in terms of scalability, error resilience, materials challenges, and startup/industry readiness.

1. The Players at a Glance

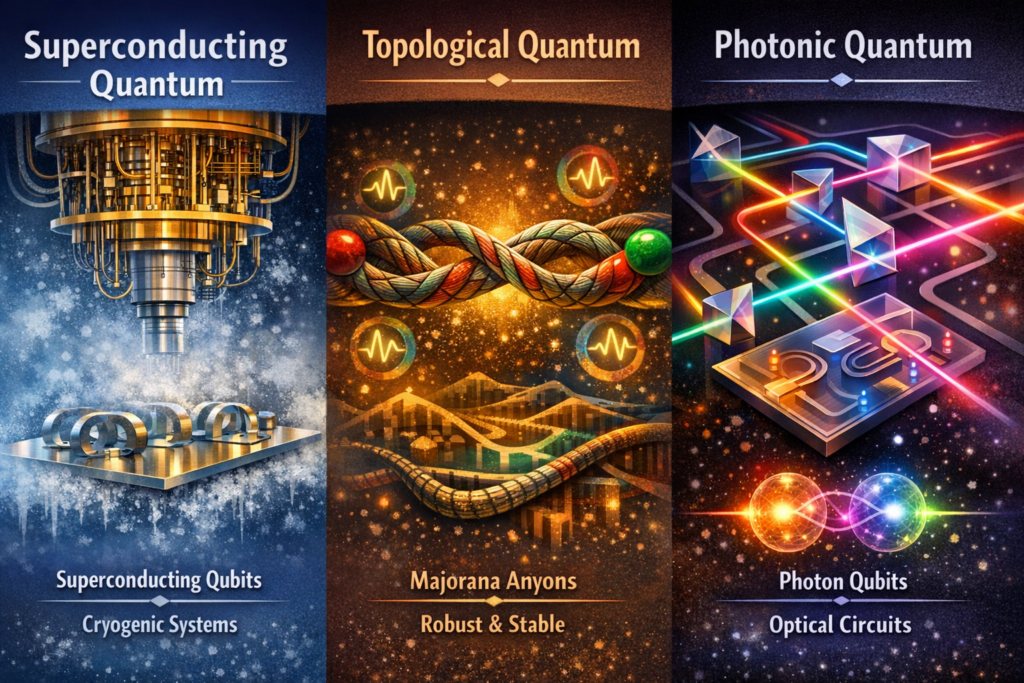

- Superconducting qubits: This is currently the most mature architecture. Companies like IBM, Google and Rigetti Computing use Josephson-junction circuits, driven by microwave pulses, at millikelvin temperatures.

- Topological qubits: A more nascent but potentially game-changing approach. The idea is to encode information non-locally in non-Abelian anyons (e.g., Majorana zero modes), so that it is intrinsically protected from many local sources of error. Microsoft has been a notable backer.

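- Photonic qubits: These use photons (in waveguides, optical fibres or integrated photonic circuits) as carriers of quantum information. The benefits include room-temperature operation of many components (though high-efficiency single-photon detectors are typically cryogenic), weak coupling to the environment and hence low decoherence, and ease of interconnection over optical links.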

2. Scalability & Connectivity

Superconducting systems have made impressive progress: processors with tens to hundreds of qubits, surface-code error-correction experiments, and efforts to link chips into modular systems. But they still require extreme (dilution-refrigerator) cryogenics and carry a large error-correction overhead.

Topological qubits could, if the underlying physics works out as hoped, lower the error-correction overhead by needing fewer physical qubits per logical qubit. However, the field is still at an early experimental stage.

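Photonic qubits shine at interconnects and offer potential for large-scale parallelism via integrated optics. However, because photons barely interact with one another, two-qubit (entangling) operations are typically probabilistic, and fast feed-forward and deterministic single-photon sources also remain significant challenges.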
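To make "error-correction overhead" more concrete, here is a rough sketch that uses the surface code as a stand-in. It assumes the common heuristic p_L ≈ A·(p/p_th)^((d+1)/2) for the logical error rate at code distance d, with an assumed threshold p_th of about 1% and an assumed prefactor A of 0.1, plus the rotated-surface-code count of 2d^2 - 1 physical qubits per logical qubit. These constants are illustrative, not measured values for any device, and a topological machine might use a different code altogether; the point is only that a lower physical error rate p, which is what topological protection aims to deliver, shrinks the required distance and therefore the qubit count.

```python
"""Rough surface-code overhead estimate (illustrative assumptions only)."""

# Assumed ballpark constants, not measured device values.
P_THRESHOLD = 1e-2  # assumed error-correction threshold (~1%)
PREFACTOR = 0.1     # assumed heuristic prefactor A


def logical_error_rate(p_phys: float, distance: int) -> float:
    """Heuristic logical error rate: A * (p / p_th) ** ((d + 1) / 2)."""
    return PREFACTOR * (p_phys / P_THRESHOLD) ** ((distance + 1) / 2)


def min_distance(p_phys: float, target: float) -> int:
    """Smallest odd code distance whose estimated logical error rate meets the target."""
    if p_phys >= P_THRESHOLD:
        raise ValueError("physical error rate must be below threshold")
    d = 3
    while logical_error_rate(p_phys, d) > target:
        d += 2  # surface-code distances are conventionally odd
    return d


def physical_qubits_per_logical(distance: int) -> int:
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * distance * distance - 1


if __name__ == "__main__":
    target = 1e-12  # assumed target logical error rate
    for p in (5e-3, 2e-3, 1e-4):
        d = min_distance(p, target)
        print(f"p={p:.0e}: distance {d}, "
              f"{physical_qubits_per_logical(d)} physical qubits per logical qubit")
```

With these assumed constants and a target of 1e-12, a physical error rate of 5e-3 calls for distance 73 (10,657 physical qubits per logical qubit), while 1e-4 needs only distance 11 (241 physical qubits).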

3. Error Rates & Noise Tolerance

In the superconducting world, gate fidelities are improving, but coherence times and cross-talk remain issues. The architecture relies heavily on error-correction layers.
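As a back-of-envelope illustration of why coherence time matters, the sketch below estimates the coherence-limited error of a single-qubit gate. It assumes only Markovian energy relaxation (T1) and pure dephasing, and uses the first-order result 1 - F ≈ t/(3·T1) + t/(3·T_phi), with 1/T_phi = 1/T2 - 1/(2·T1). It ignores control errors, leakage and the cross-talk mentioned above, and the gate time and T1/T2 values in the example are hypothetical round numbers rather than figures for any particular chip.

```python
def coherence_limited_infidelity(gate_time: float, t1: float, t2: float) -> float:
    """First-order average infidelity of a single-qubit gate limited by T1 and T2.

    1 - F ~ t/(3*T1) + t/(3*T_phi), with 1/T_phi = 1/T2 - 1/(2*T1).
    Only meaningful when gate_time is much shorter than T1 and T2.
    """
    if min(gate_time, t1, t2) <= 0:
        raise ValueError("times must be positive")
    if t2 > 2 * t1:
        raise ValueError("T2 cannot exceed 2*T1")
    inv_t_phi = 1 / t2 - 1 / (2 * t1)  # pure-dephasing rate
    return gate_time / (3 * t1) + gate_time * inv_t_phi / 3


if __name__ == "__main__":
    # Hypothetical numbers: 25 ns gate, T1 = T2 = 100 microseconds.
    err = coherence_limited_infidelity(25e-9, 100e-6, 100e-6)
    print(f"coherence-limited error per gate ~ {err:.2e}")
```

For these hypothetical values the estimate is about 1.25e-4 per gate; doubling T1 and T2 halves it, which is why coherence gains feed directly into lower error-correction overhead.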

Topological qubits aim for inherent error resilience: the non-local encoding reduces susceptibility to local decoherence. If realized, this could dramatically reduce resource overhead.

Photonic systems must contend with photon loss, mode mismatch and detector inefficiency. Even so, measurement-based (cluster-state) approaches and photonic error-correction schemes offer promising directions.
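Photon loss compounds quickly because every photon in a multi-photon event has to survive. Here is a minimal sketch, assuming each photon sees the same independent path loss (given in dB) and the same detector efficiency; the 1 dB and 90% figures in the example are hypothetical.

```python
def db_to_transmission(loss_db: float) -> float:
    """Convert optical loss in dB to the fraction of photons transmitted."""
    return 10.0 ** (-loss_db / 10.0)


def all_photons_detected(path_loss_db: float, detector_efficiency: float,
                         n_photons: int) -> float:
    """Probability that all n photons survive their paths and are detected.

    Assumes identical, independent loss for every photon.
    """
    eta = db_to_transmission(path_loss_db) * detector_efficiency  # per-photon efficiency
    return eta ** n_photons


if __name__ == "__main__":
    # Hypothetical budget: 1 dB of path loss and 90% detector efficiency per photon.
    for n in (1, 4, 10):
        print(n, f"{all_photons_detected(1.0, 0.9, n):.3f}")
```

Under these assumptions the per-photon efficiency is about 71%, but the chance of detecting all ten photons falls to roughly 3.5%: the success rate decays exponentially with photon number.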

4. Materials, Fabrication & Infrastructure

Superconducting qubits benefit from well-established microfabrication techniques (thin-film superconductors, Josephson junctions) and mature cryogenic infrastructure such as commercial dilution refrigerators. The challenges are device-to-device variation, fabrication yield and scaling.

Topological qubits require exotic materials (e.g., superconductor-semiconductor hybrids, topological insulators, nanowires with strong spin-orbit coupling) and demanding fabrication, often at the edge of current device physics.

Photonic qubits leverage integrated photonics (silicon photonics, silicon nitride, lithium niobate), which is a strong ecosystem, but scaling up requires ultra-low-loss components, deterministic photon sources and sophisticated routing.
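One common workaround for non-deterministic photon sources is multiplexing: run several probabilistic (heralded) sources in parallel and route whichever one succeeds. Assuming independent sources that each herald a photon with probability p per attempt, the chance that at least one fires is 1 - (1 - p)^N. The sketch below uses that expression to estimate how many sources are needed. It ignores the switching loss a real multiplexer adds, and p = 0.1 in the example is a hypothetical value. The same arithmetic applies to retrying a heralded, probabilistic entangling operation.

```python
import math


def multiplexed_success(p_single: float, n_sources: int) -> float:
    """Probability that at least one of n independent heralded sources fires."""
    return 1.0 - (1.0 - p_single) ** n_sources


def sources_needed(p_single: float, target: float) -> int:
    """Smallest n such that multiplexed_success(p_single, n) >= target."""
    if not (0.0 < p_single < 1.0 and 0.0 < target < 1.0):
        raise ValueError("probabilities must lie strictly between 0 and 1")
    n = max(1, math.ceil(math.log(1.0 - target) / math.log(1.0 - p_single)))
    # Nudge n to absorb floating-point round-off near the boundary.
    while multiplexed_success(p_single, n) < target:
        n += 1
    while n > 1 and multiplexed_success(p_single, n - 1) >= target:
        n -= 1
    return n


if __name__ == "__main__":
    # Hypothetical heralding probability of 10% per source per attempt.
    n = sources_needed(0.1, 0.99)
    print(n, f"{multiplexed_success(0.1, n):.4f}")
```

With p = 0.1, reaching 99% success takes 44 sources, which shows why multiplexing places heavy demands on low-loss, fast routing.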

5. Startup / Industry Readiness

Superconducting quantum computers are already being offered commercially (e.g., cloud access) and the ecosystem (tooling, software stacks) is maturing.

Topological quantum computers are still mostly at the research stage; significant engineering hurdles remain.

Photonic quantum computing and communications are also gaining traction — quantum networking, quantum key distribution, photonic chips — offering hybrid applications even before full fault-tolerant quantum computing.

6. Which Architecture for Which Use Case?

- If you’re looking for near-term quantum advantage in, e.g., simulating chemical systems, superconducting is the frontrunner.

- If you’re building for the long haul with an eye on fault tolerance and minimal overhead, topological may win — but it’s a longer-term bet.

- If connectivity, scaling via optical interconnects, or quantum networking are your focus, photonic architectures bring distinct advantages.

7. My Perspective: What to Watch

Given my physics background, I believe the big disruption will come when one architecture (or hybrid) crosses the fault-tolerance threshold with practical resource counts. We’ll need breakthroughs in materials (for topological qubits), integrated photonic sources, cryogenic interconnects, and software/hardware co-design.

For startups: align with architectures that match your time horizon, risk tolerance, and ecosystem. If you aim for the next 3 to 5 years, superconducting and photonic make sense; for 5 to 10 years and beyond, keep an eye on topological.

For investors: diversify across architectures, because the “winning” design may come from unexpected quarters, possibly a hybrid model combining photonics for interconnects and superconductors for processing, or major leaps in error-correction algorithms.

Conclusion

The quantum computing race is multi-faceted. Superconducting is leading now, topological holds promise for the future, and photonic brings interconnect and networking advantages. Understanding the trade-offs (physical implementation, error rates, scaling, materials, startup readiness) is essential for entrepreneurs, investors and technologists. As quantum technologies mature, the architecture you choose will shape your strategic position.

This blog post was written, and its images were generated, with the assistance of Gemini, Copilot, ChatGPT and Sora, based on ideas and insights from Edgar Khachatryan.